Tutorial: Use Transfer Learning to ReBuild Previous Machine Learning Model Builds Using New Data

In machine learning, transfer learning is the process of re-training or re-purposing a previously built ML model for a new problem or domain. Imagine having built a QSAR model for solubility on a dataset of size x. Now a new set of synthesized molecules with solubility values comes in, with a size that is a fraction of x. These molecules differ from the initial training set in either moiety or pharmacophore. We would like to update our previous model to include knowledge of this new data. Consider a similar but different problem: we have built a QSAR model on thermodynamic solubility and would like to repurpose it to predict kinetic solubility, because the training data for kinetic solubility is not large enough to build a robust model. In both examples, we wish to re-use previously built robust ML models and extend them to a new domain or to a new but similar problem.

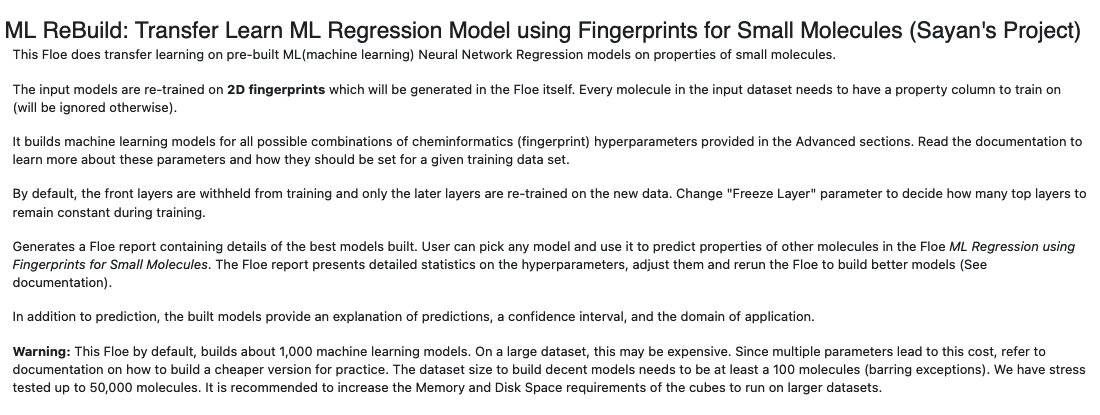

In this tutorial we will see how to use the floe ML ReBuild: Transfer Learn ML Regression Model using Fingerprints for Small Molecules to re-train a previously built ML model using transfer learning. Note: we will provide you with a built ML model, but in case you want to build one from scratch, refer to the tutorial Building Machine Learning Regression Models for Property Prediction of Small Molecules.

Create a Tutorial Project

Note

If you have already created a Tutorial project you can re-use the existing one.

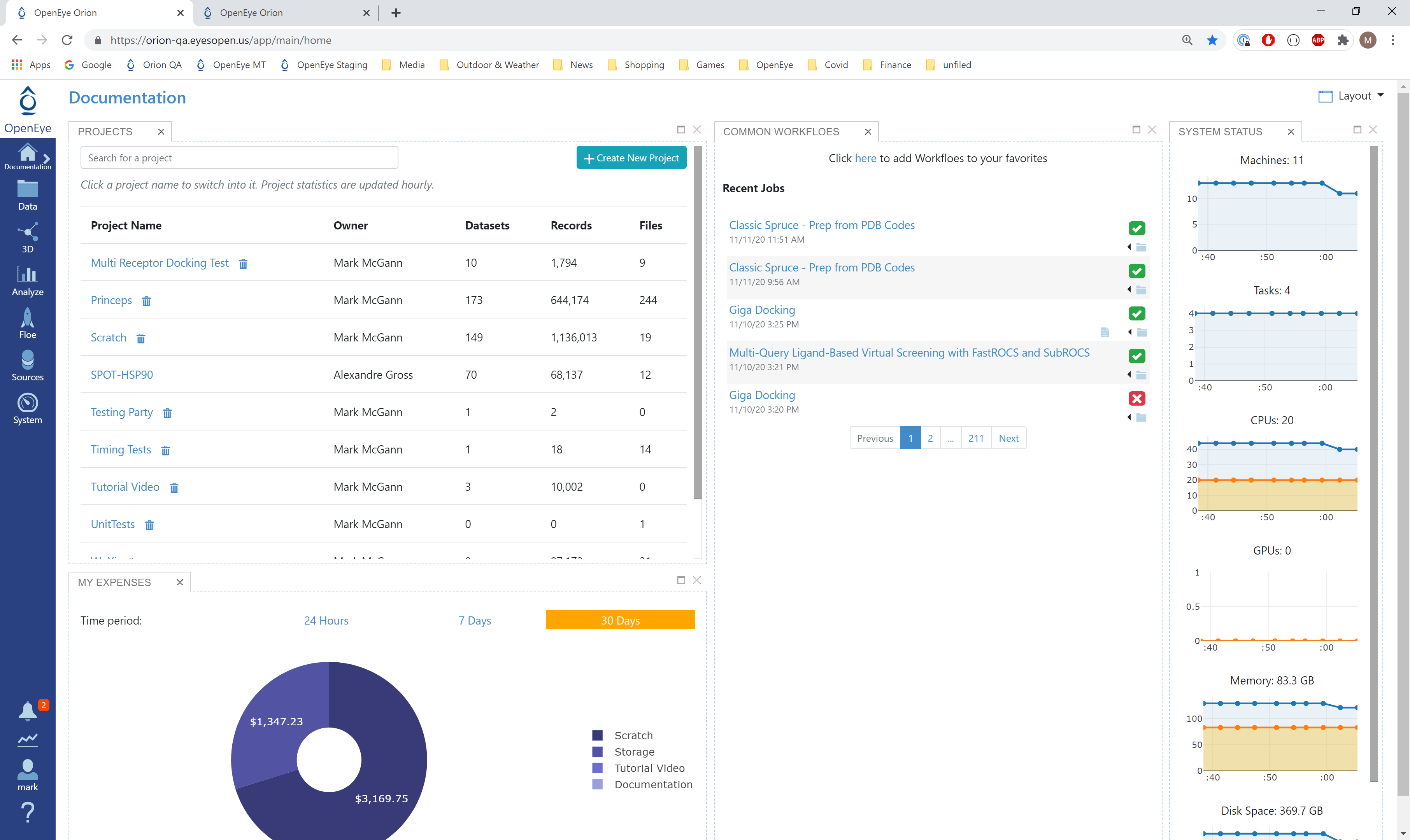

Log into Orion and click the home button at the top of the blue ribbon on the left of the Orion interface. Then click the ‘Create New Project’ button and, in the pop-up window, enter Tutorial for the name of the project and click ‘Save’.

Orion home page

Floe Input

The inputs required for the Floe are:

Molecule Dataset (P_1) with float response value

Tensorflow Machine Learning Model Dataset (M_1) to transfer learn on

For P_1, uploading a .csv, .sdf, or similar file to Orion automatically converts it to a dataset. The solubility dataset contains several OERecords. The floe expects two things from each record:

An OEMol which is the molecule to train the models on

A Float value which contains the regression property to be learnt. For this example, it is the Solubility (loguM) value.

Here is a sample record from the dataset:

OERecord (

*Molecule(Chem.Mol)* : c1ccc(c(c1)NC(=O)N)OC[C@H](CN2CCC3(CC2)Cc4cc(ccc4O3)Cl)O

*Solubility loguM(Float)* : 3.04

)
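Since an uploaded .csv file is converted to a dataset, one way to prepare your own P_1 is to write a two-column CSV. The following is a minimal sketch using only the Python standard library. The column names are assumptions modeled on the sample record above, and how Orion maps CSV columns to record fields is not covered here.

```python
import csv
import io

# Hypothetical column names, modeled on the sample record above.
SMILES_COLUMN = "Molecule"
RESPONSE_COLUMN = "Solubility loguM"

# SMILES and value copied from the sample record above.
rows = [
    ("c1ccc(c(c1)NC(=O)N)OC[C@H](CN2CCC3(CC2)Cc4cc(ccc4O3)Cl)O", 3.04),
]


def rows_to_csv(rows):
    """Serialize (SMILES, response) pairs to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow([SMILES_COLUMN, RESPONSE_COLUMN])
    for smiles, value in rows:
        # float() raises early if a response value is not numeric.
        writer.writerow([smiles, float(value)])
    return buffer.getvalue()


csv_text = rows_to_csv(rows)
print(csv_text)
```

Converting each response with `float()` before writing catches non-numeric entries early, which matters because the floe expects a Float response value on every record.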

The M_1 dataset contains one or more machine learning models. To learn how to generate these models, read previous tutorials on building models in Orion.

Input Machine Learning Models

Input Training Dataset

The model has been trained on loguM solubility values from AZ ChEMBL data. The re-training dataset comes from a different version of ChEMBL and has no molecules in common with the original training data.

To start, select the input dataset in the parameter Input Small Molecules to train machine learning models on.

We can change the default names of the Outputs or leave them as is.

The property to be trained on goes in the Response Value Field under the Options tab. Note that this response value must exactly match the response value on which the models in M_1 were trained.
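Because the response field name has to match exactly, it can help to check your column names before uploading. The sketch below is a hypothetical helper, not part of Orion or the floe. It accepts only a verbatim match and, as a convenience, points out near misses that differ only in case, spacing, or punctuation.

```python
def _loose(name):
    """Reduce a field name to lowercase letters and digits for near-miss detection."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def check_response_field(available_fields, expected_field):
    """Return expected_field if present verbatim, otherwise raise ValueError."""
    if expected_field in available_fields:
        return expected_field
    # Look for names that differ only in case, whitespace, or punctuation.
    near = [f for f in available_fields if _loose(f) == _loose(expected_field)]
    hint = f" Did you mean {near[0]!r}?" if near else ""
    raise ValueError(f"Response field {expected_field!r} not found.{hint}")


# Field names here are illustrative assumptions.
print(check_response_field(["Molecule", "Solubility loguM"], "Solubility loguM"))
```

A call such as `check_response_field(["Molecule", "Solubility(loguM)"], "Solubility loguM")` raises a `ValueError` that suggests the similar name instead of silently accepting it.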

Run OEModel Building Floe

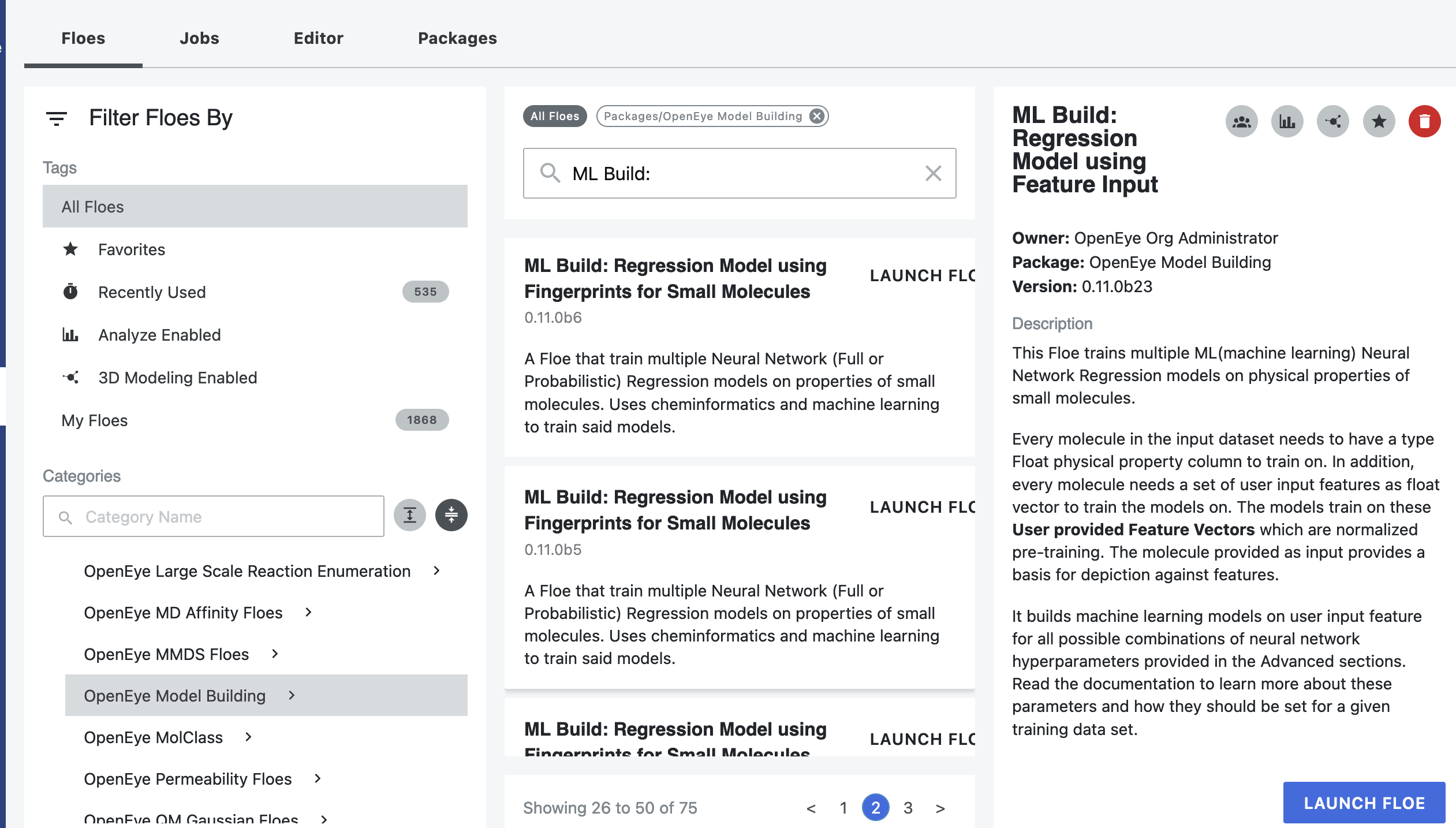

Click on the ‘Floes’ button in the left menu bar

Click on the ‘Floes’ tab

Under the ‘Categories’ tab select ‘OpenEye Model Building’ package

In the search bar enter ML ReBuild

A list of Floes will now be visible to the right

Launch the floe ML ReBuild: Transfer Learn ML Regression Model using Fingerprints for Small Molecules and a Job Form will pop up. Specify the following parameter settings in the Job Form.

Click on the ‘Input Small Molecules to train machine learning models on’ button

Select the given dataset P_1 or your own dataset.

Click on the ‘Input tensorflow Model’ button

Select the pretrained solubility model M_1 or your own trained model

Under Outputs, change the name of the ML models built to be saved in Orion. We will keep it to defaults for this tutorial.

Under the ‘Options’ tab, do the following:

For the ‘Select models for transfer learning training’ option: if your input ML model dataset has more than one model, you need to pick which model you want to transfer learn on. If the default of -1 is retained, the floe trains all the available models in parallel. This can be expensive if the M_1 you choose has many models.

For our example, M_1 has only one model, with model ID 2040. So keeping the model selection at -1 or setting it to 2040 gives the same result.
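The selection rule described above can be sketched in a few lines of Python. This is only an illustration of the behavior as stated in this tutorial (-1 means every model, any other value means that one model ID). The function name and error handling are assumptions, not the floe's actual code.

```python
ALL_MODELS = -1  # default value of the model selection option described above


def select_models(available_ids, selection=ALL_MODELS):
    """Return the list of model IDs that would be re-trained."""
    if selection == ALL_MODELS:
        # Default: every model in the input dataset is trained.
        return list(available_ids)
    if selection not in available_ids:
        raise ValueError(f"Model ID {selection} is not in the input model dataset")
    return [selection]


# With a single model, both settings select the same thing.
print(select_models([2040]))
print(select_models([2040], 2040))
```

With several models in M_1, the default returns all of them, which is why the cost of a run grows with the number of models when -1 is kept.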

Select the response value which the model will train on under the Options tab. This field dynamically generates a list of columns to choose from based on the columns in the input dataset. For our data, it is Solubility(loguM). Again, this response value must exactly match the response value on which the models in M_1 were trained.

Select how many model reports you want to see in the final floe report. This field prevents memory blowup in case you generate more than 1,000 models. In such cases, viewing the top 20-50 models should suffice.
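Limiting the report to the best few models amounts to a top-N selection. The sketch below assumes the models are ranked by a validation error where lower is better. The ranking metric, the model IDs other than 2040, and the error values are all made up for illustration.

```python
import heapq


def top_models(validation_errors, limit):
    """Return up to `limit` (model_id, error) pairs with the smallest error."""
    return heapq.nsmallest(limit, validation_errors.items(), key=lambda item: item[1])


# Hypothetical model IDs and validation errors.
errors = {2040: 0.61, 2041: 0.48, 2042: 0.75}
print(top_models(errors, 2))  # [(2041, 0.48), (2040, 0.61)]
```

Using `heapq.nsmallest` avoids sorting the full list when only a small number of reports is wanted out of a large number of models.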

Promoted parameter Preprocess Molecule :

Keeps the largest molecule if more than one is present in a record.

Sets pH value to neutral.

Turn this parameter on.

You can apply the Blockbuster Filter if need be

If it is necessary to transform the training values (Solubility in this case) to their negative log, turn on the Log. Neg Signal switch as well. Keep it off for this example.

As a general rule, you should select the last three options based on what you chose when building the original model
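For reference, a negative log transform of the kind the Log. Neg Signal switch refers to can be sketched as follows. The base of the logarithm is an assumption here (base 10 is used), and the function name is hypothetical, so check the floe documentation for the exact definition.

```python
import math


def neg_log_signal(value):
    """Return -log10(value); assumes base 10 and a strictly positive input."""
    if value <= 0:
        raise ValueError("negative log is only defined for positive values")
    return -math.log10(value)


print(neg_log_signal(0.001))
```

The transform maps 0.001 to approximately 3. Values that are already on a log scale, such as the loguM values in this tutorial, do not need it, which is consistent with keeping the switch off for this example.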

Finally, select how you want to view the molecule explainer for machine learning results.

‘Atom’ annotates every atom by its degree of contribution towards the final result.

‘Fragment’ does this for every molecule fragment (generated by the oemedchem toolkit) and is the preferred method of medicinal chemists.

‘Combined’ produces visualisations of both these techniques combined.

Changing the layers to train during transfer learning

Under the cube parameters of the Parallel Transfer Learning Neural Network Regression Training Cube, there is a Layers to freeze parameter. It sets how many layers at the front of the model are NOT trained. Increase it if the re-training dataset is smaller than the initial training dataset.
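The effect of Layers to freeze can be pictured with a small, framework-free sketch. The `Layer` class and layer names below are hypothetical stand-ins. In Keras, the analogous switch is the `trainable` attribute on each layer, but whether the cube uses exactly this mechanism internally is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical stand-in for a neural network layer."""
    name: str
    trainable: bool = True


def freeze_front_layers(layers, layers_to_freeze):
    """Mark the first `layers_to_freeze` layers as not trainable, the rest as trainable."""
    if not 0 <= layers_to_freeze <= len(layers):
        raise ValueError("layers_to_freeze must be between 0 and the number of layers")
    for index, layer in enumerate(layers):
        layer.trainable = index >= layers_to_freeze
    return layers


model_layers = [Layer("dense_1"), Layer("dense_2"), Layer("output")]
freeze_front_layers(model_layers, 2)
print([(layer.name, layer.trainable) for layer in model_layers])
```

Freezing more of the front layers leaves fewer weights to fit, which is why a larger value suits a small re-training dataset: the early layers keep what they learned from the original data and only the later layers adapt.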

That’s it! Let’s go ahead and run the Floe!

Analysis of Output and Floe Report

After the floe has finished running, click the link on the ‘Floe Report’ tab in your window to preview the report. Since the report is big, it may take a while to load. If this is the case, try refreshing or popping the report out to a new window (located in the purple circle in the image below). All results reported in the Floe report are on the validation data. Note that the floe report is designed to give a comparative study of the different models built. Because this run applied transfer learning to only one model, the graphs contain a single data point and may be less insightful.

The Analyze page looks similar to that of a regular model building floe, except that there is no hyperparameter tuning. For this part, refer to the Analysis of Output and Floe Report section.