Tutorial: Building Machine Learning Classifier Fingerprint Models for Physical Property Prediction of Small Molecules¶

OpenEye Model Building is a tool to build machine learning models that predict a physical property of small molecules.

In this tutorial, we will train several classification models to predict IC50 concentrations at which molecules become reactant to pyrrolamides. The IC50 responses are divided into ‘Low’, ‘Medium’ and ‘High’ based on a quartile based cutoff. The training data is known as the PyrrolamidesA dataset. The classification models will be built on a combination of fingerprint and neural network hyperparameters. The results will be visualized in both the analyze page and Floe report. We will leverage the Floe report to analyze and choose a good model. Finally, we will choose a built model to predict the property of some unseen molecules.

This tutorial uses the following Floe:

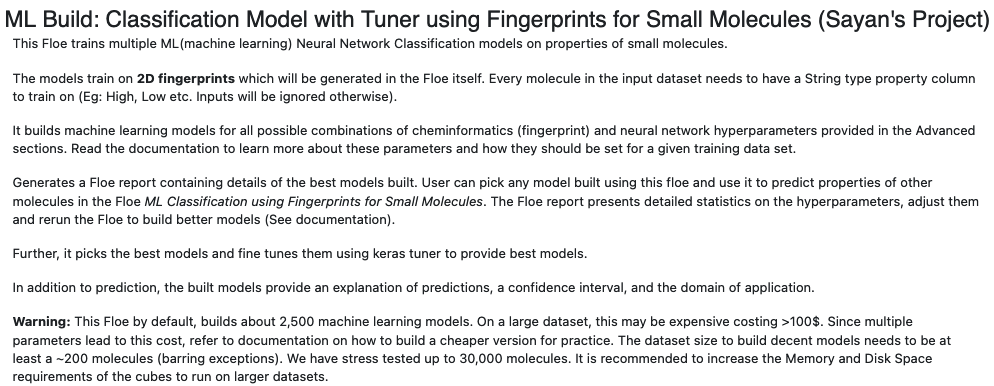

ML Build: Classification Model with Tuner using Fingerprints for Small Molecules.

Warning

This tutorial keeps default parameters and builds around 1k machine learning models. While the per model cost is very cheap, based on the size of dataset, total cost might be expensive. For instance, with the pyrrolamides dataset (~1k molecules) it costs around $100. To understand the working of the Floe, we suggest building lesser models by referring to the Cheaper and Faster Version of the tutorial. The floe in that tutorial builds regression models, but the idea to reduce parameters should hold for classification as well.

Create a Tutorial Project¶

Note

If you have already created a Tutorial project you can re-use the existing one.

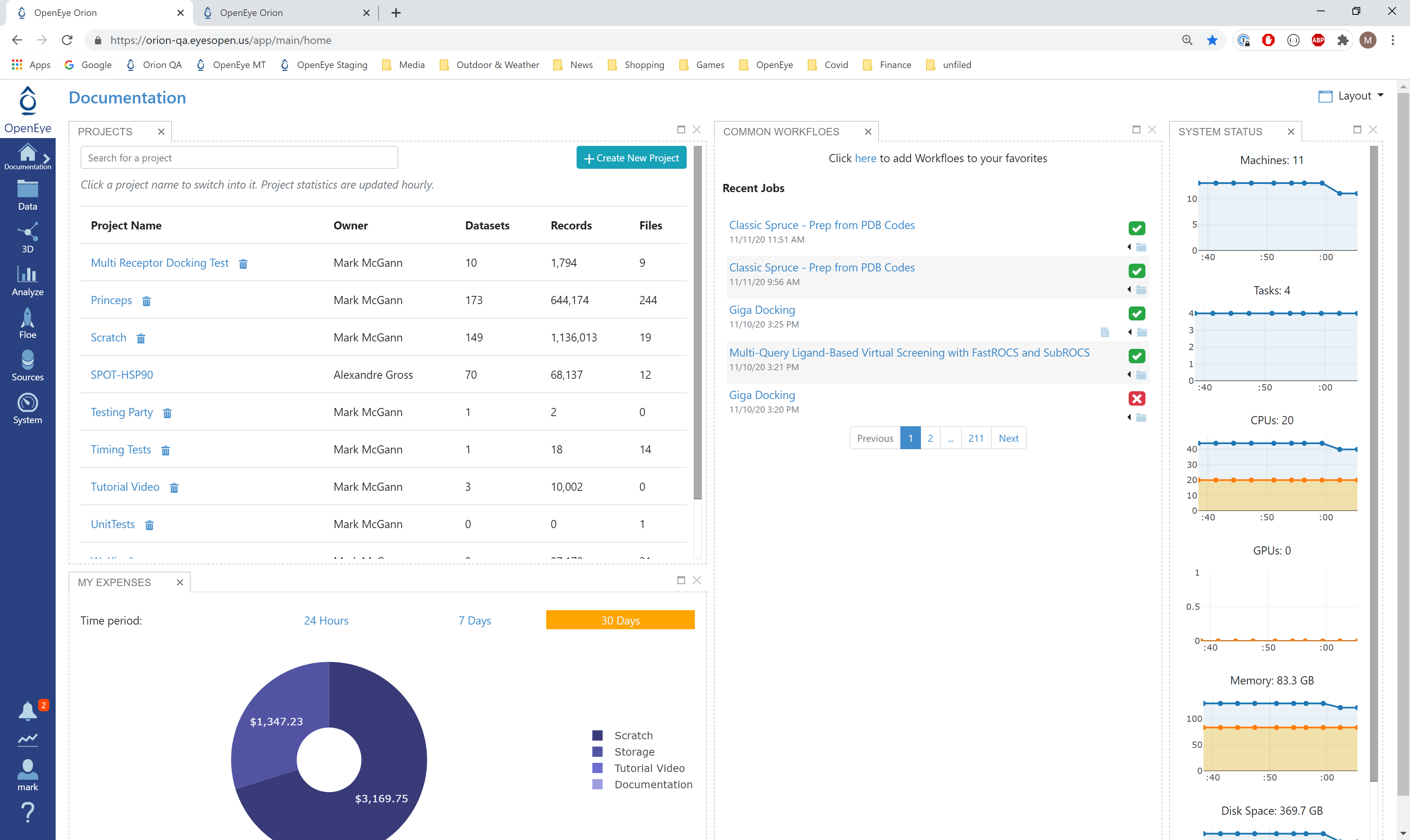

Log into Orion and click the home button at the top of the blue ribbon on the left of the Orion Interface. Then click on the ‘Create New Project’ button and in the pop up window enter Tutorial for the name of the project and click ‘Save’.

Orion home page¶

Floe Input¶

The Floe requires an input dataset file, “Input Small Molecules to train machine learning models on”, with each record in the file an OEMolField .

There needs to be a separate field in this file containing the String physical property to train the network on. This field needs to be selected in the promoted field parameter Response Value Field.

The PyrrolamidesA dataset contains several OERecord (s). As stated, the Floe expects two things from each record:

An OEMol which is the molecule to train the models on

A String value which contains the classification property to be learnt. For this example, it is the Low, Medium or High IC Class of the NegIC50 concentration.

Here is a sample record from the dataset:

OERecord (

*Molecule(Chem.Mol)* : c1cc(c(nc1)N2CCC(CC2)NC(=O)c3cc(c([nH]3)Cl)Cl)[N+](=O)[O-]

*IC Class(String)* : Low

)

Input Data

Run OEModel Building Floe¶

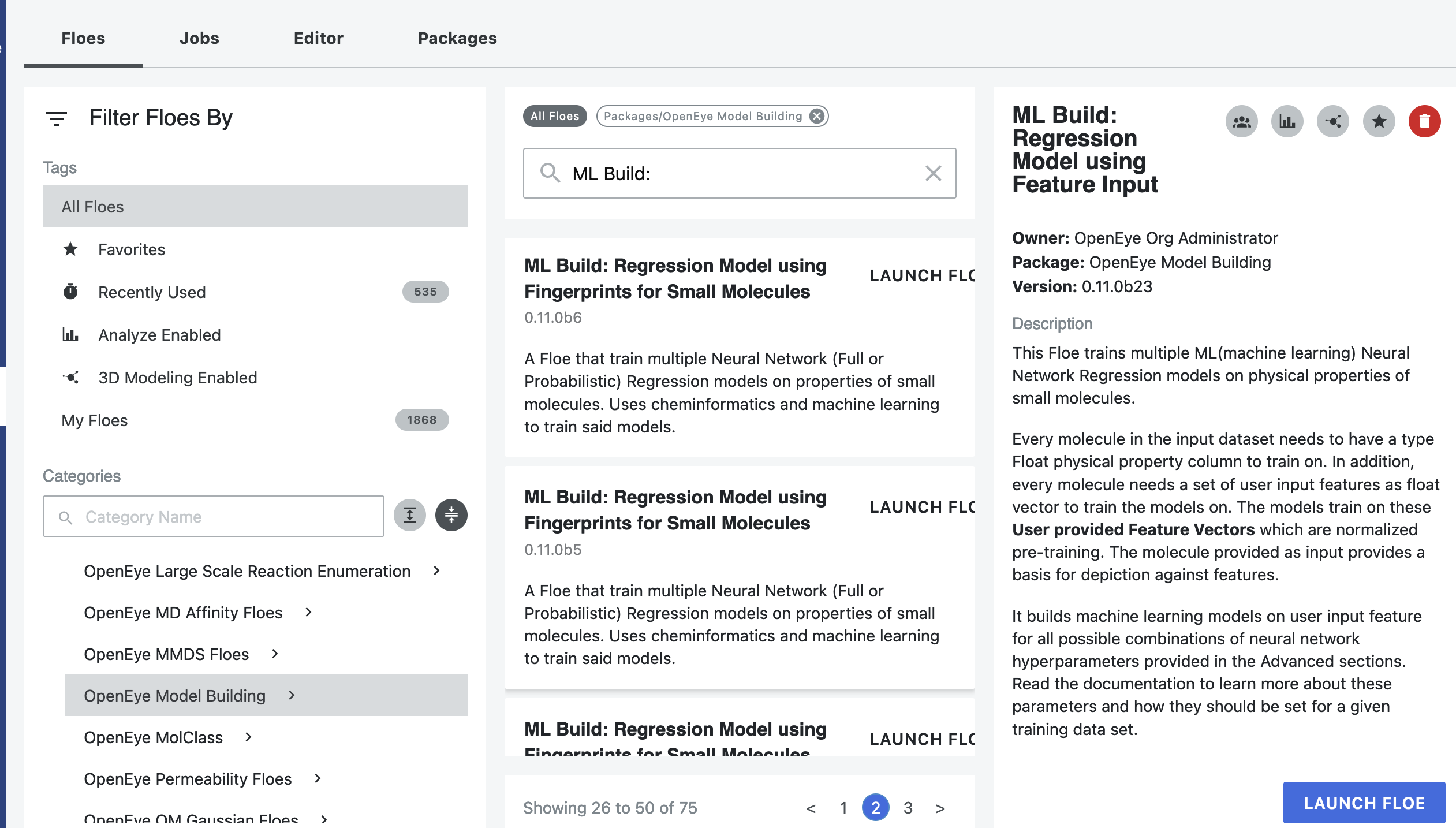

Click on the ‘Floes’ button in the left menu bar

Click on the ‘Floes’ tab

Under the ‘Categories’ tab select ‘OpenEye Model Building’ package

In the search bar enter ML Build

A list of Floes will now be visible to the right

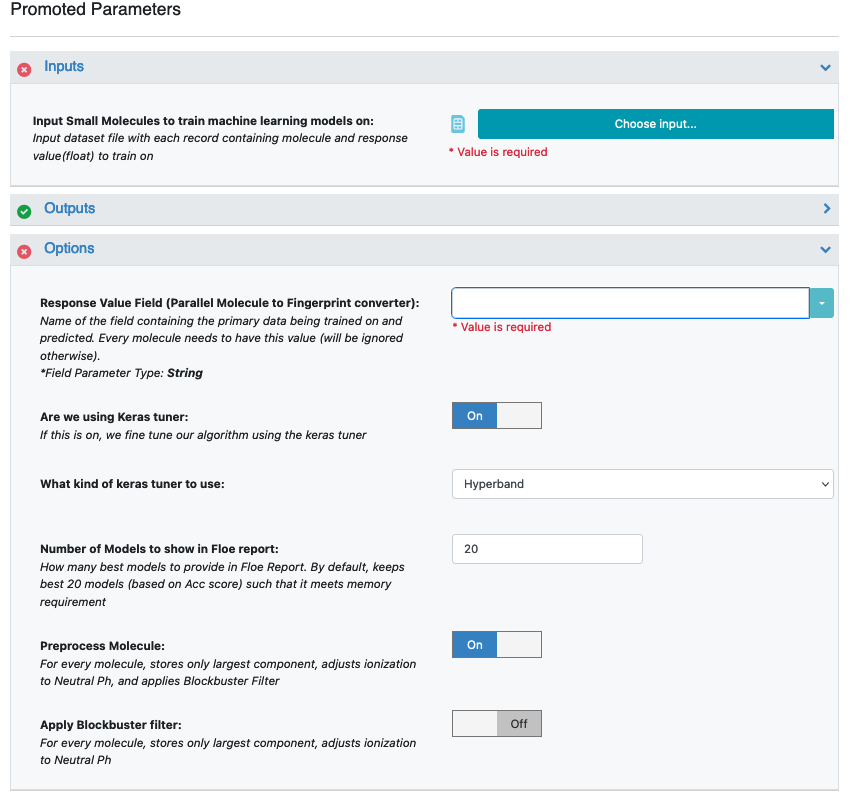

Launch the floe ML Build: Classification Model with Tuner using Fingerprints for Small Molecules and a Job Form will pop up. Specify the following parameter settings in the Job Form.

Click on the Input Small Molecules to Train Machine Learning Models on. button

Select the PyrrolamidesA dataset or your own dataset.

Change the name of the ML models built to be saved in Orion. We will keep it to defaults for this tutorial.

Under the Options tab, select the ‘Response Value Field’ which the model will train on. This field dynamically generates a list of string columns to choose from based on the uploaded dataset’s columns. For our data, its IC Class.

Parameter Are we using Keras tuner: Uses Hyperparameter optimizer using Keras Tuner. Keep this on by default

Parameter What kind of keras tuner to use: You can keep Hyperband by default or change to any of the other options.

The input molecules and the training field values can be filtered and transformed by the preprocessing units:

Parameter Preprocess Molecule :

Keeps the largest molecule if more than one is present in a record.

Sets pH value to neutral.

Parameter Blockbuster Filter :

Applies the Blockbuster Filter

Select how many model reports you want to see in the final Floe report. This field prevents memory blowup in case you generate >1k models. In such cases viewing the top 20-50 models should suffice.

Next, turn on the Boolean parameter Preprocess Molecule. This preps every molecule by removing salts, charge, and multiple components, keeping the largest molecule. It also sets the ionization of the molecule to neutral pH.

Optionally, apply the blockbuster filter as well

Select how you want to view the ‘Molecule Explainer Type’ for machine learning results prediction.

‘Atom’ annotates every atom by their degree of contribution towards final result.

‘Fragment’ does this for every molecule fragment (generated by the oemedchem tookit) and is the preferred method of medicinal chemists.

‘Combined’ produces visualisations of both these techniques combined.

Change the default name of outputs to something recognizable.

You can let the model run at this point and it should run succesfully with default parameters. However, we can tweak a few parameters to learn more about the functionality.

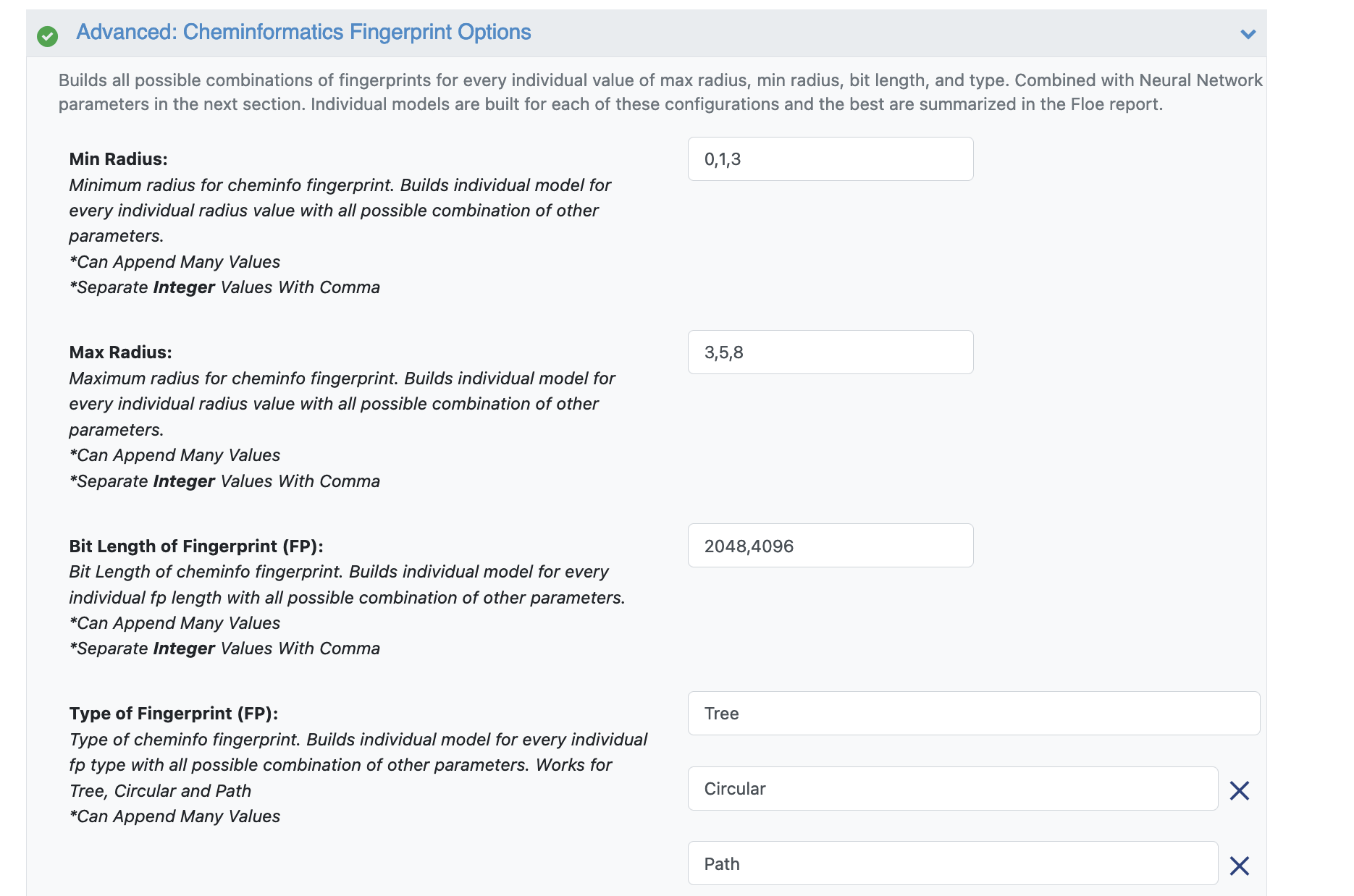

Open the Advanced: Cheminformatics Fingerprint Options. This tab has all the cheminformatics parameters the model will be built on. We can change or add more values to the fingerprint parameters as shown in the image.

For suggestions on tweaking, read the How-to-Guide on building Optimal Models

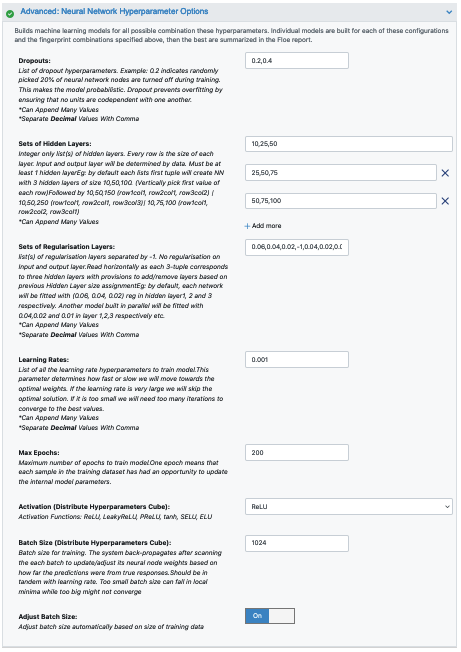

Next we move to the Advanced: Neural Network Hyperparameters Options. This is where the Machine Learning parameters are listed. Again, we can leave them as defaults, or choose to add/modify a few values based on the How-To-Guide.

We add Dropouts to prevent overfitting.

Next, let us inspect the parameter Sets of Hidden Layers.

Since it is a -1 separated list, by default there are 3-layer networks of size (250,150,50) and (150,100,80).

This gives a total node size of 330(150+100+80) and 240(100+80+60) in the default models.

Plugging in the formula stated in the How-to-Guide building Optimal Models, we should probably reduce the number of nodes to prevent overfitting.

Another important hyperparameter is the ‘Sets of regularisation Layers’. This field sets L2 regularisation for each network layer, making it a -1 separated 3-tuple list as well.

Increasing the Learning Rates is a way of speeding things up, although the algorithm may not detect the minima if this value is too high. We can train our model on multiple learning rate values. Leave defaults here.

If the dataset is big, we may consider increasing the Max Epochs size as it may take the algorithm longer to converge. Leave defaults here.

Activation: RelU is the most commonly used activation function for most models. Change to one of the options in the list if needed (see How-to-Guide on building Optimal Models).

The Batch Size defines the number of samples that will be propagated through the network. With larger batch sizes there is a significant degradation in the quality of the model, as measured by its ability to generalize. However, too small of a batch size may take the model a very long time to converge. For a dataset size of ~2k, 64 is probably a good batch size. However for datasets of saround 100k, we may want to increase batch size to at least 5k.

Set Batch size to 64

That’s it! Lets go ahead and run the Floe!

Note

The default memory requirement for each cube has been set to moderate to keep the price low. If you are training larger datasets, be mindful of the time it takes for the cubes to run into completion. Increasing the memory will let Orion assign instances with more processing power, thereby resulting in a faster completion. In some cases, the cubes may run out of memory (indicated in the log report) for large datasets. Increasing cube memory will fix this as well.

Analysis of Output and Floe Report¶

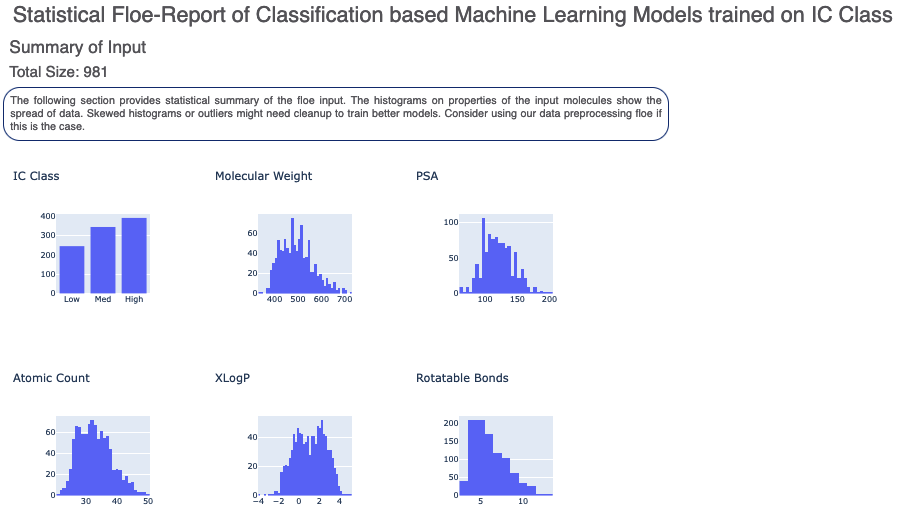

After the Floe has finished running, click the link on the ‘Floe Report’ tab in your window to preview the report. Since the report is big, it may take a while to load. Try refreshing or popping the report out to a new window if this is the case. All results reported in the Floe report are on the validation data. The top part summarizes statistics on the whole input data.

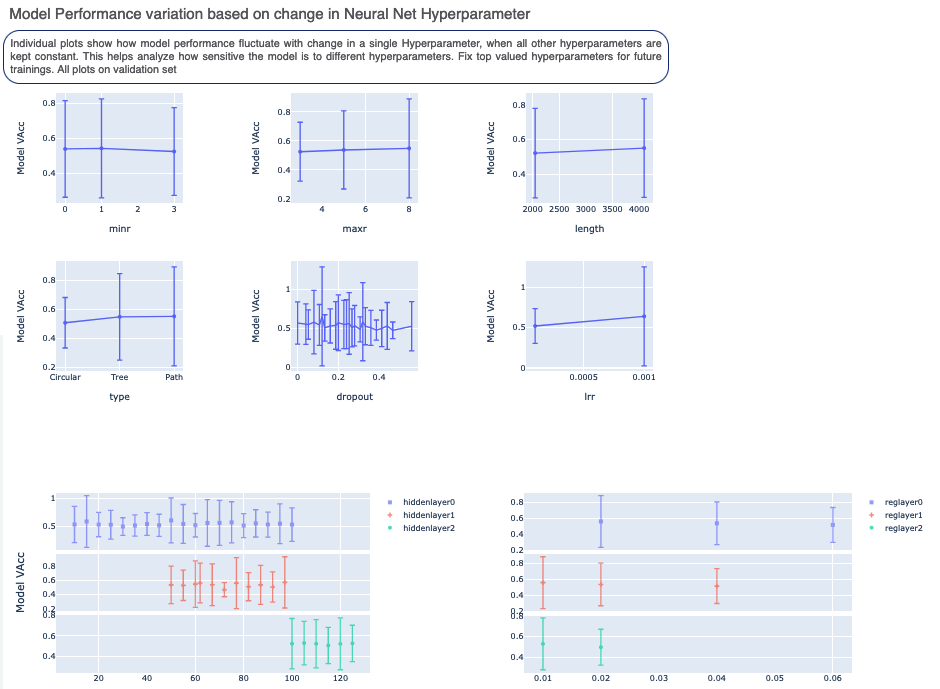

The graphs show the Accuracy on the validation set for different values of the cheminformatics and neural network hyperparameters. It helps us analyze how sensitive the different hyperparameter values are and plan future model builds accordingly. For instance, the graph below shows that dropout of 0.1 and maxr of 5 are better choices for parameters in future model build runs.

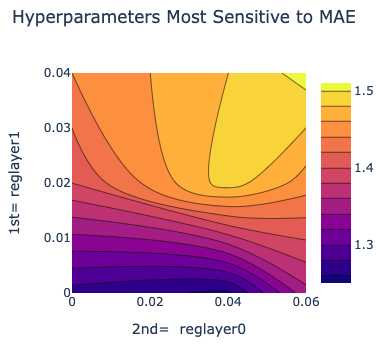

There is also a plot between the top two most sensitive hyperparameters. In the example below, the top two most sensitive parameters are the regularisation 1 and regularisation 0. Choosing value around the minima in the MAE heatmap (0 for reglayer1 and 0.04 for reglayer2), will build better models in future runs.

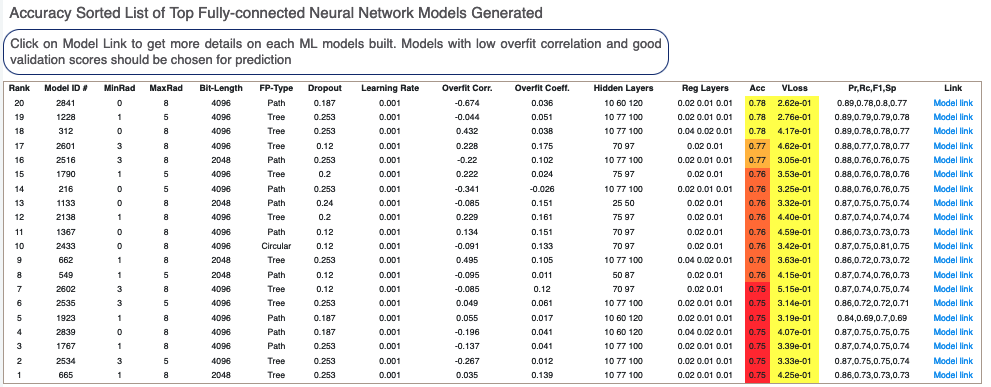

Next, we tabulate the list of all models built by the fully connected network. These models are sorted by Acc Score (on validation data). On the face of it, the first row should be the best model since it has the least error on the validation data. But there can be several other factors besides the best Acc to determine this, starting with Loss in the next column. Hence, lets look at a sample model by clicking the ‘Model Link’. This will take you to a new Floe report page.

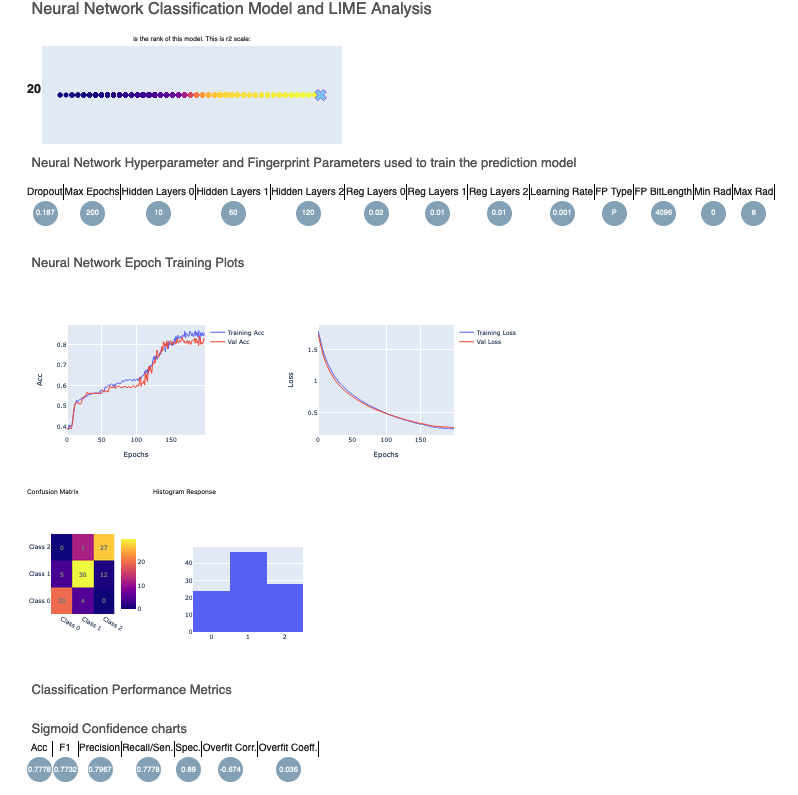

In the image for the top ranked model, we have a linear color scale showing the rank. We also have the cheminformatics and machine learning parameters the model was trained on.

Let’s look at the training curves under ‘Neural Network Epoch Training Plots’. We see that the training and validation Acc is relatively close. Indicating no overfitting. Although the confusion matrix and F1, Precision, Recall scores shown below are not bad, it is best we look at other models as well.

In contrast, here is the picture to model link 5. We see that the training and validation Acc follow a similar trend which is a good sign of not overfitting. Had they been diverging, we might have to go back and tweak parameters such as number of hidden layer nodes, dropouts, regularizers etc. If there were too much fluctuation in the graph it would suggest we need a slower learning rate. The confusion matrix and performance metrics look reasonable as well making Model 5 a good candidate to predict NegIC50 value of unknown molecules.

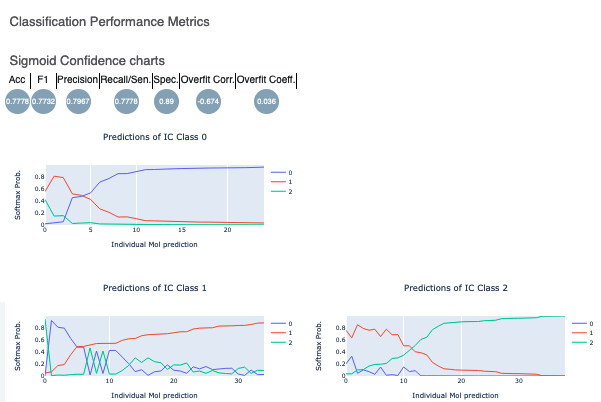

Lastly, the Sigmoid Confidence Chart plots show the discernability of the final sigmoid layer of the neural network. As shown in the diagram, for the first graph, the IC Class zero has a greater softmax probability than either 1 or 2. The same holds of the prediction of the other two IC Classes. These plots illustrate that the sigmoid layer is confident in its prediction on the validation data. This is another sign of good model training.

Below that, click on the interesting molecule to see the annotated explainer of the machine learning model.

While this tutorial shows how to build classification models, building an optimized model is a non-trivial task. Refer to the How-to-Guide on building Optimal Models.

After we have found a model choice, we can use it to predict and or validate against unseen molecules. Go to the next tutorial Use Pretrained Model to predict generic property of molecules to do this.